Mean-shift distillation for diffusion mode seeking

Abstract

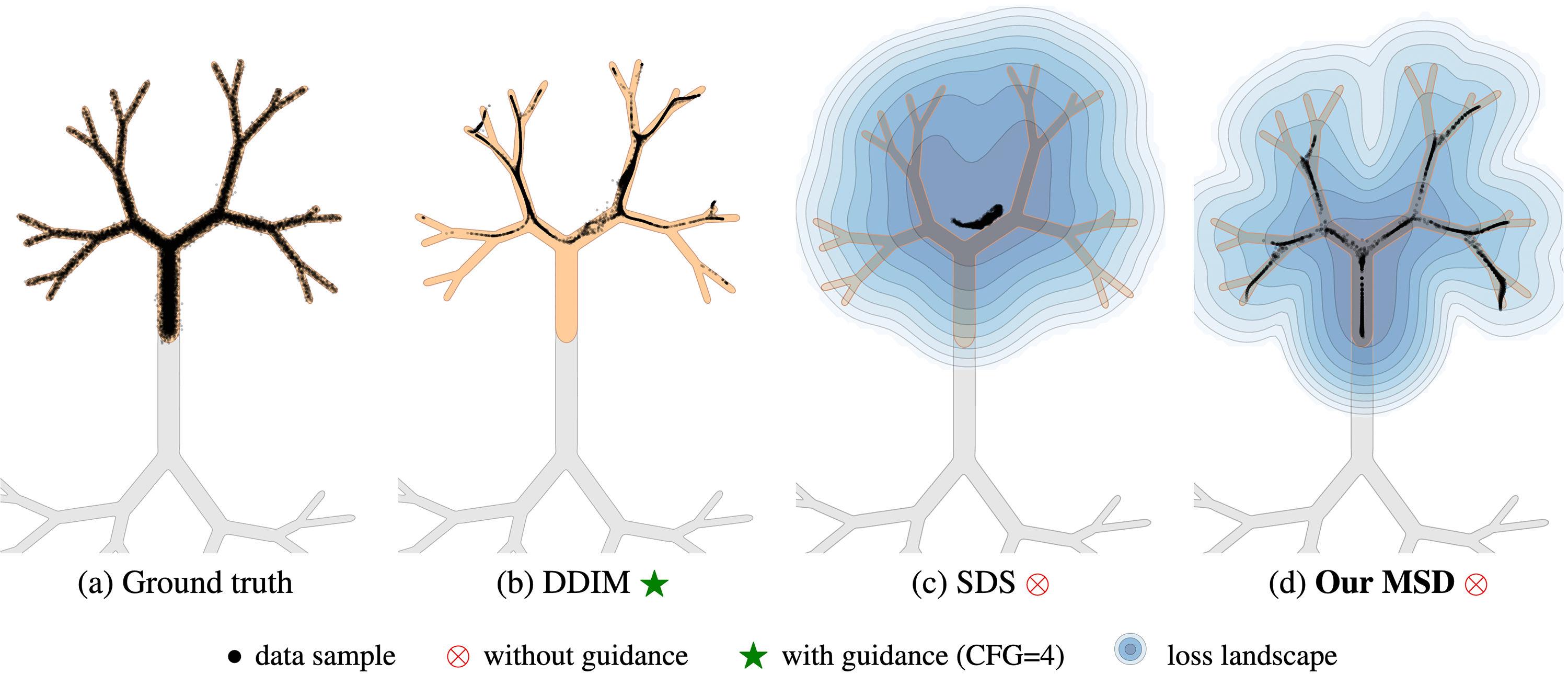

We present mean-shift distillation, a novel diffusion distillation technique that provides a provably good proxy for the gradient of the diffusion output distribution. We derive this proxy directly from mean-shift mode seeking on the distribution and show that its extrema are aligned with the distribution's modes. We further derive an efficient product-distribution sampling procedure to evaluate the gradient.
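The method builds on classical mean-shift mode seeking. As a hedged illustration of that underlying idea only (this is not the authors' diffusion-based estimator or their product-distribution sampler), the sketch below runs textbook mean shift on a 1D Gaussian kernel density estimate built from a handful of hypothetical sample points. It also checks numerically that, for a Gaussian kernel with bandwidth h, the mean-shift vector equals h² times the gradient of the log density. A mean-shift step is therefore a rescaled gradient-ascent step whose zeros are exactly the stationary points of the estimated density. All function names, the data, and the bandwidth are assumptions chosen for illustration.

```python
import math
from typing import Sequence


def gaussian_kernel(u: float, bandwidth: float) -> float:
    """Unnormalized Gaussian kernel; the normalizing constant cancels in mean shift."""
    return math.exp(-0.5 * (u / bandwidth) ** 2)


def kde_log_density(x: float, samples: Sequence[float], bandwidth: float) -> float:
    """Log of the (unnormalized) Gaussian kernel density estimate at x."""
    return math.log(sum(gaussian_kernel(x - s, bandwidth) for s in samples))


def mean_shift_vector(x: float, samples: Sequence[float], bandwidth: float) -> float:
    """m(x) = sum_i K(x - x_i) x_i / sum_i K(x - x_i) - x."""
    weights = [gaussian_kernel(x - s, bandwidth) for s in samples]
    weighted_mean = sum(w * s for w, s in zip(weights, samples)) / sum(weights)
    return weighted_mean - x


def mean_shift(x0: float, samples: Sequence[float], bandwidth: float = 0.5,
               tol: float = 1e-10, max_iter: int = 1000) -> float:
    """Repeatedly move x to the kernel-weighted mean until the step is negligible."""
    x = x0
    for _ in range(max_iter):
        step = mean_shift_vector(x, samples, bandwidth)
        x += step
        if abs(step) < tol:
            break
    return x


def log_density_gradient(x: float, samples: Sequence[float], bandwidth: float,
                         eps: float = 1e-5) -> float:
    """Central finite difference of the KDE log density (used only as a check)."""
    return (kde_log_density(x + eps, samples, bandwidth)
            - kde_log_density(x - eps, samples, bandwidth)) / (2 * eps)


# Hypothetical 1D data with two clusters, giving modes near -2 and +2.
SAMPLES = [-2.1, -2.0, -1.9, 1.9, 2.0, 2.1]
H = 0.5

if __name__ == "__main__":
    for start in (0.5, -0.5):
        print(f"start {start:+.1f} -> mode {mean_shift(start, SAMPLES, H):+.4f}")
    # For a Gaussian kernel, m(x) = h^2 * d/dx log p(x); both values should agree.
    x = 0.7
    print(mean_shift_vector(x, SAMPLES, H), H ** 2 * log_density_gradient(x, SAMPLES, H))
```

Run as a script, both starting points climb to the mode of the nearer cluster (+2.0000 and -2.0000), and the two printed values for m(0.7) agree to finite-difference precision.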

Our method is formulated as a drop-in replacement for score distillation sampling (SDS), requiring neither model retraining nor extensive modification of the sampling procedure. We show that it exhibits superior mode alignment and improved convergence in both synthetic and practical setups, yielding higher-fidelity results in both text-to-image and text-to-3D applications with Stable Diffusion.
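For orientation, the SDS gradient that mean-shift distillation is meant to replace is usually written as below, following the formulation introduced with DreamFusion. Here g is a differentiable generator with parameters θ, x = g(θ) is its output image, z_t is the noised version of x at timestep t with noise ε, y is the text prompt, ε̂_φ is the frozen diffusion model's noise prediction, and w(t) is a timestep weighting. This notation is the conventional one and is not taken from this page.

```latex
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}\big(\phi, x = g(\theta)\big)
  = \mathbb{E}_{t,\epsilon}\!\left[ w(t)\,\big(\hat{\epsilon}_\phi(z_t; y, t) - \epsilon\big)\,
    \frac{\partial x}{\partial \theta} \right]
```

A drop-in replacement keeps this outer structure (a per-sample direction in image space backpropagated through ∂x/∂θ) and changes only how that direction is computed.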


BibTeX reference

@misc{Thamizharasan:2025:MeanShiftDistillation,
  title={Mean-Shift Distillation for Diffusion Mode Seeking},
  author={Vikas Thamizharasan and Nikitas Chatzis and Iliyan Georgiev and Matthew Fisher and Difan Liu and Nanxuan Zhao and Evangelos Kalogerakis and Michal Lukac},
  year={2025},
  eprint={2502.15989},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2502.15989},
}