SAMa: Material-aware 3D selection and segmentation

Abstract

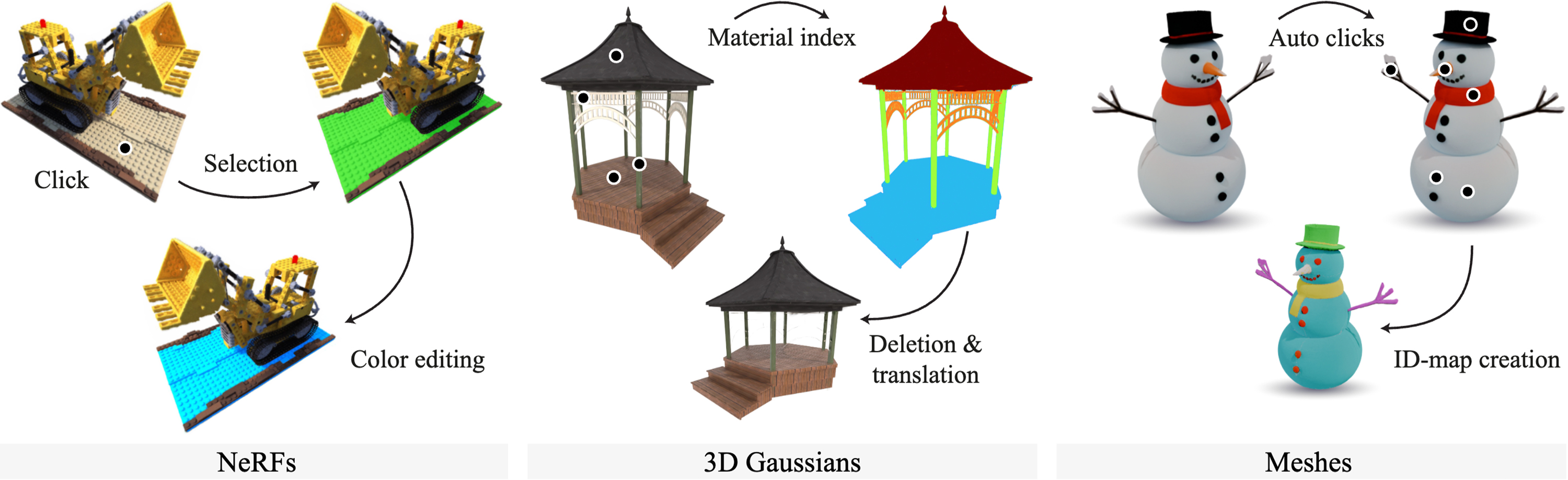

Decomposing 3D assets into material parts is a common task for artists and creators, yet remains a highly manual process. In this work, we introduce Select Any Material (SAMa), a material selection approach for various 3D representations. Building on the recently introduced SAM2 video selection model, we extend its capabilities to the material domain. We leverage the model's cross-view consistency to create a 3D-consistent intermediate material-similarity representation in the form of a point cloud from a sparse set of views. Nearest-neighbor lookups in this similarity cloud allow us to efficiently reconstruct accurate continuous selection masks over objects' surfaces that can be inspected from any view. Our method is multiview-consistent by design, alleviating the need for contrastive learning or feature-field pre-processing, and performs optimization-free selection in seconds. Our approach works on arbitrary 3D representations and outperforms several strong baselines in terms of selection accuracy and multiview consistency. It enables several compelling applications, such as replacing the diffuse-textured materials on a text-to-3D output with PBR materials, or selecting and editing materials on NeRFs and 3D-Gaussians.
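The nearest-neighbor lookup into the similarity point cloud can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `query_similarity` and `select`, the inverse-distance weighting, the neighbor count `k`, and the `threshold` value are all assumptions chosen for illustration, and the brute-force search stands in for whatever spatial index a practical system would use.

```python
import heapq
import math


def query_similarity(cloud_points, cloud_similarity, query, k=3, eps=1e-8):
    """Estimate material similarity at `query` from its k nearest cloud samples.

    Assumption: neighbors are blended with inverse-distance weights; the
    abstract only states that nearest-neighbor lookups are used.
    """
    # Brute-force k-nearest-neighbor search (a k-d tree would scale better).
    nearest = heapq.nsmallest(
        k,
        zip(cloud_points, cloud_similarity),
        key=lambda sample: math.dist(sample[0], query),
    )
    # Closer samples contribute more; eps avoids division by zero.
    weights = [1.0 / (math.dist(point, query) + eps) for point, _ in nearest]
    weighted_sum = sum(w * sim for w, (_, sim) in zip(weights, nearest))
    return weighted_sum / sum(weights)


def select(cloud_points, cloud_similarity, surface_points, threshold=0.5, k=3):
    """Return a boolean selection mask over arbitrary surface points."""
    return [
        query_similarity(cloud_points, cloud_similarity, q, k) >= threshold
        for q in surface_points
    ]


# Toy similarity cloud: two samples of the selected material near x = 0,
# two samples of a different material near x = 1 (hypothetical values).
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.0, 0.0, 0.0), (1.1, 0.0, 0.0)]
similarity = [1.0, 0.9, 0.0, 0.1]

mask = select(points, similarity, [(0.05, 0.0, 0.0), (1.05, 0.0, 0.0)], k=2)
```

Because the lookup only needs 3D positions, the same query could in principle be evaluated at surface points obtained from a mesh, a NeRF, or 3D Gaussians, which is consistent with the representation-agnostic claim above.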

Downloads and links

- paper (PDF, 3.8 MB)

- supplemental document (PDF, 6.8 MB)

- arXiv preprint

- project page

- citation (BIB)

BibTeX reference

@misc{Fischer:2024:SAMa,
  title={SAMa: Material-aware 3D Selection and Segmentation},
  author={Michael Fischer and Iliyan Georgiev and Thibault Groueix and Vladimir G. Kim and Tobias Ritschel and Valentin Deschaintre},
  year={2024},
  eprint={2411.19322},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2411.19322},
}